
I am an Assistant Professor at the Artificial Intelligence Innovation and Incubation Institute of Fudan University. I obtained my PhD in Computer Science at the University of Chicago, where I was fortunate to be advised by Professor Rebecca Willett. Prior to that, I received my master's degree in Operations Research from Columbia University and my bachelor's degree from Xi'an Jiaotong University.


My research interests lie in representation learning and generative modeling, with applications in AI for Science and computer vision. In particular, I focus on developing hierarchical generative models that leverage semantic or physical embeddings to capture and preserve key properties of high-dimensional data. My work has been applied to long-term prediction of dynamical systems, uncertainty-aware solutions to inverse problems, and high-performance semantic generative models for complex scenes. Currently, I am especially interested in long-sequence prediction tasks, including video generation and time series modeling.


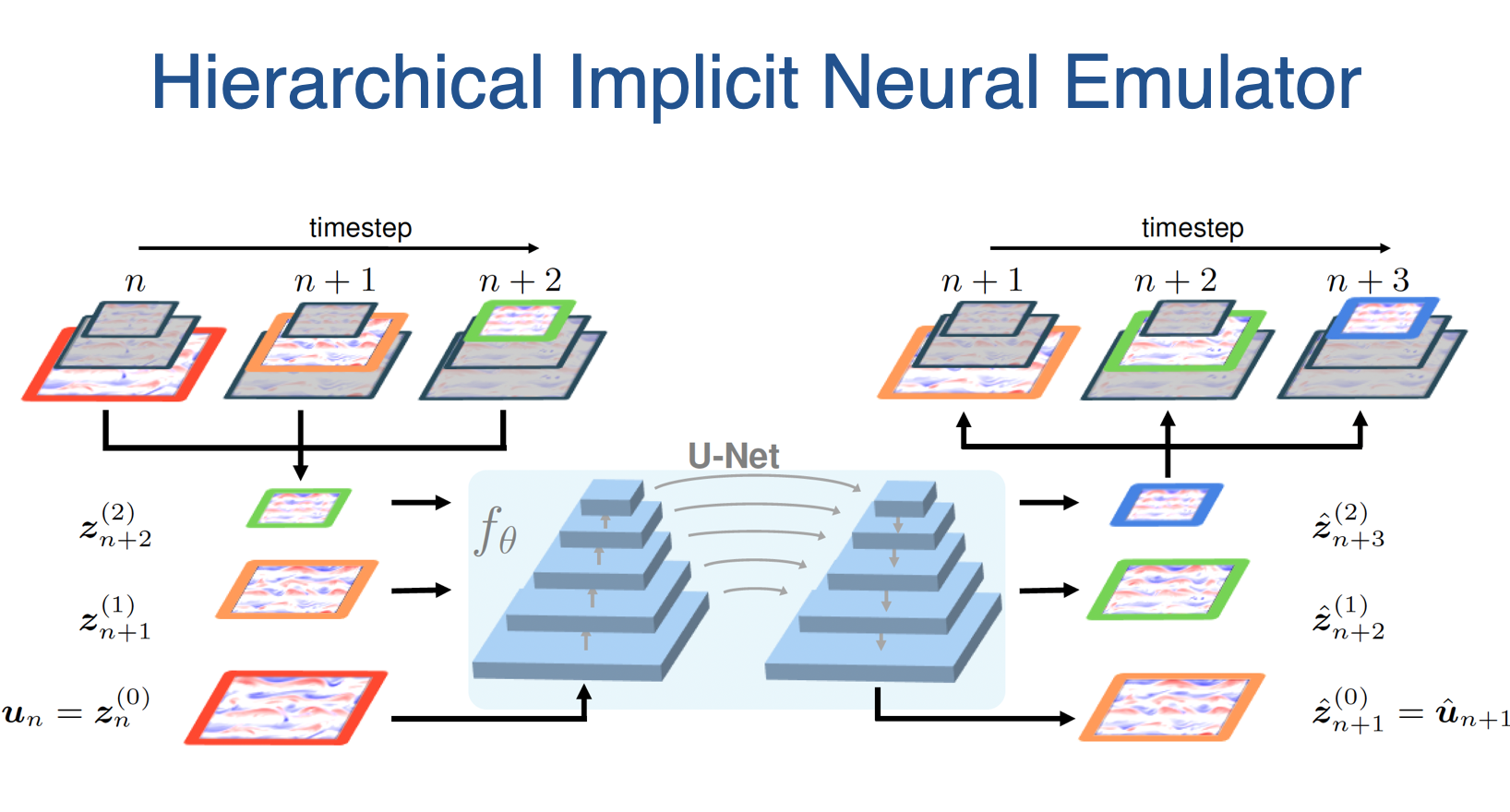

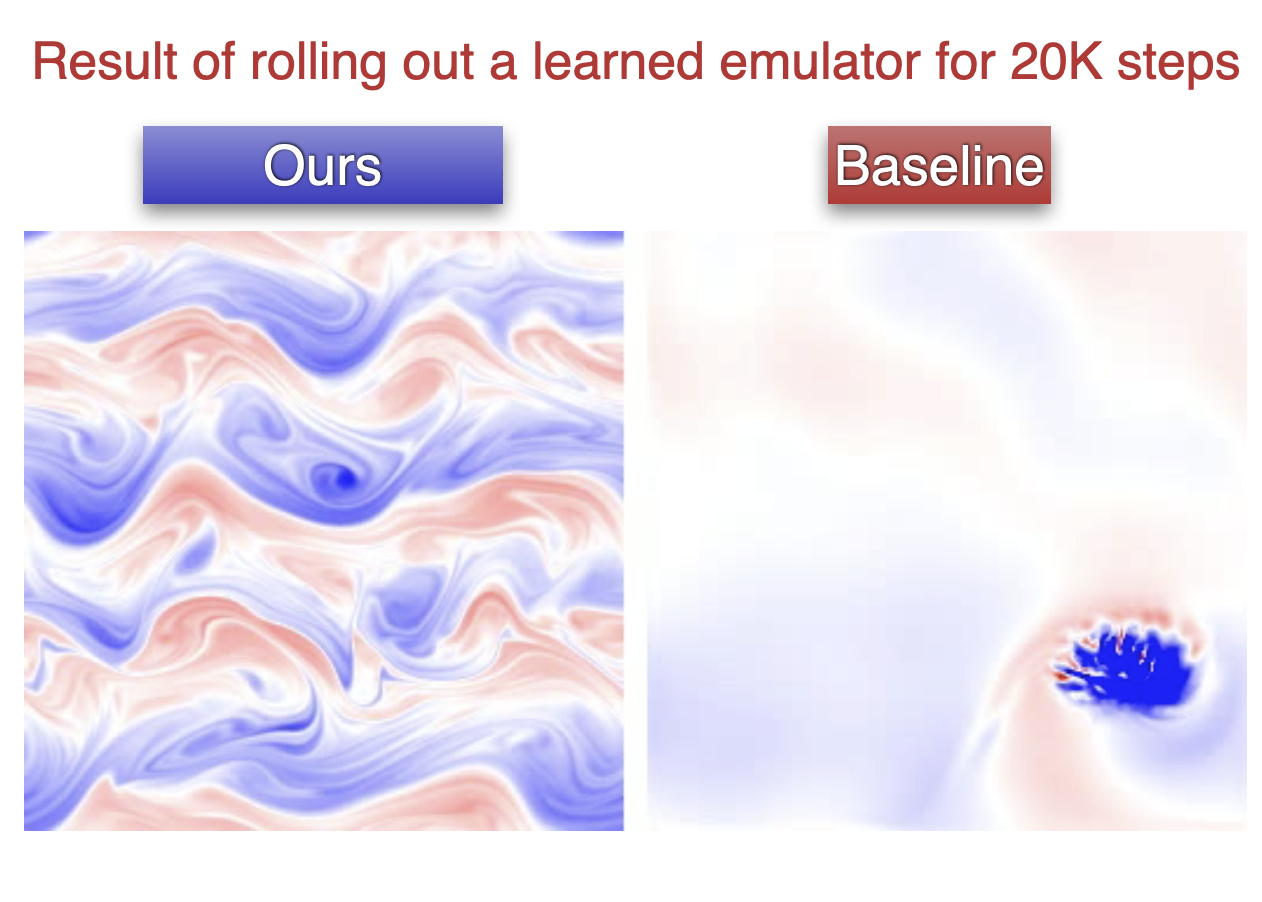

Ruoxi Jiang, Xiao Zhang, Karan Jakhar, Peter Y. Lu, Pedram Hassanzadeh, Michael Maire, Rebecca Willett. NeurIPS 2025. We propose a novel emulator that operates on an augmented state built from a hierarchy of coarse-grained representations of future states. This approach captures multiscale interactions and, by autoregressively applying the emulator to the augmented state, efficiently mimics the iterative refinement mechanism of implicit solvers, achieving better accuracy and stability.


Ruoxi Jiang*, Peter Y. Lu*, Rebecca Willett. Under review. We introduce a novel likelihood-free inference method based on contrastive learning that efficiently handles high-dimensional data and complex, multimodal parameter posteriors.


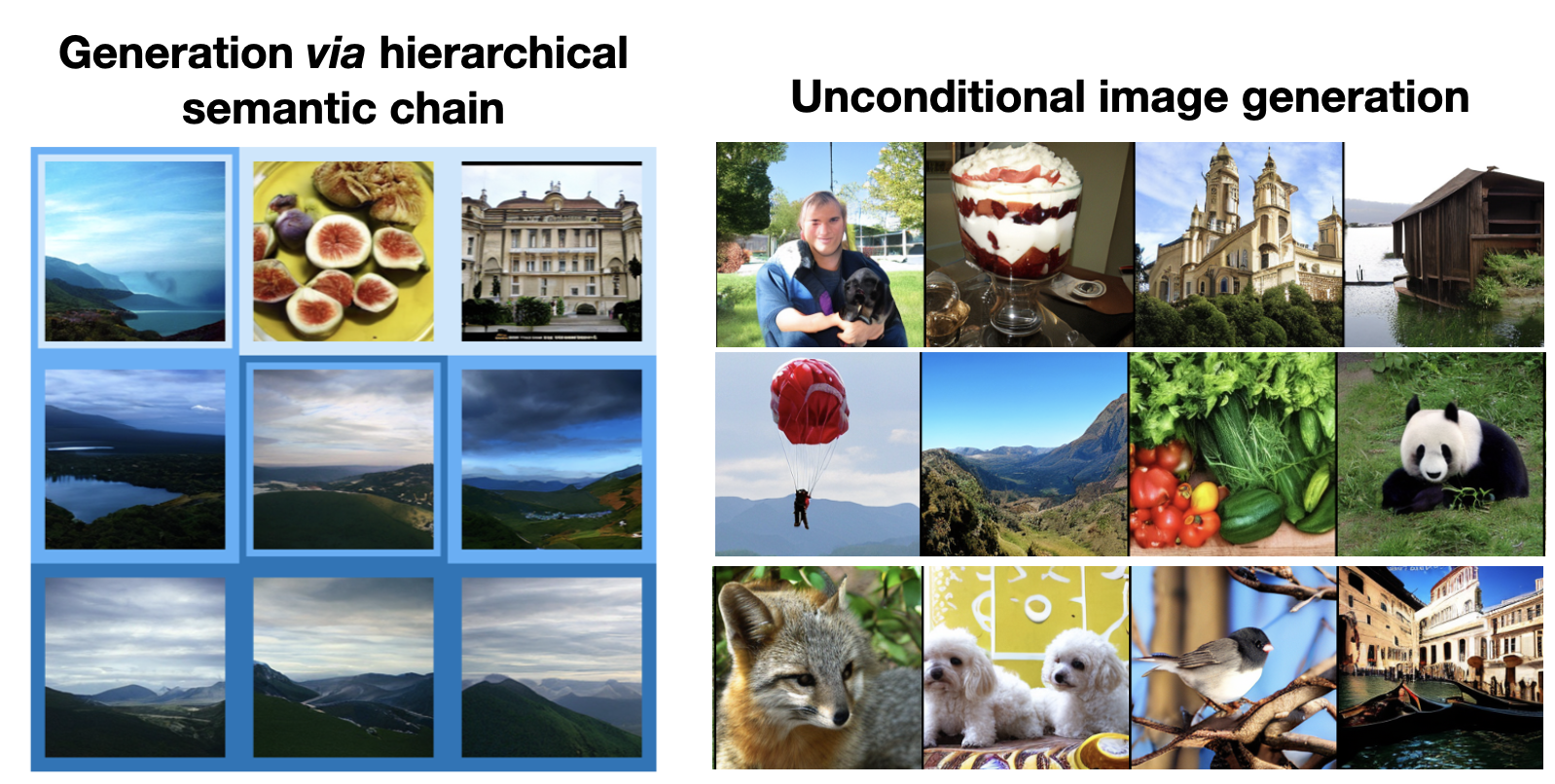

Xiao Zhang*, Ruoxi Jiang*, Rebecca Willett, Michael Maire. CVPR 2025. We introduce nested diffusion models, an efficient and powerful hierarchical generative framework that substantially improves the generation quality of diffusion models, particularly for images of complex scenes.


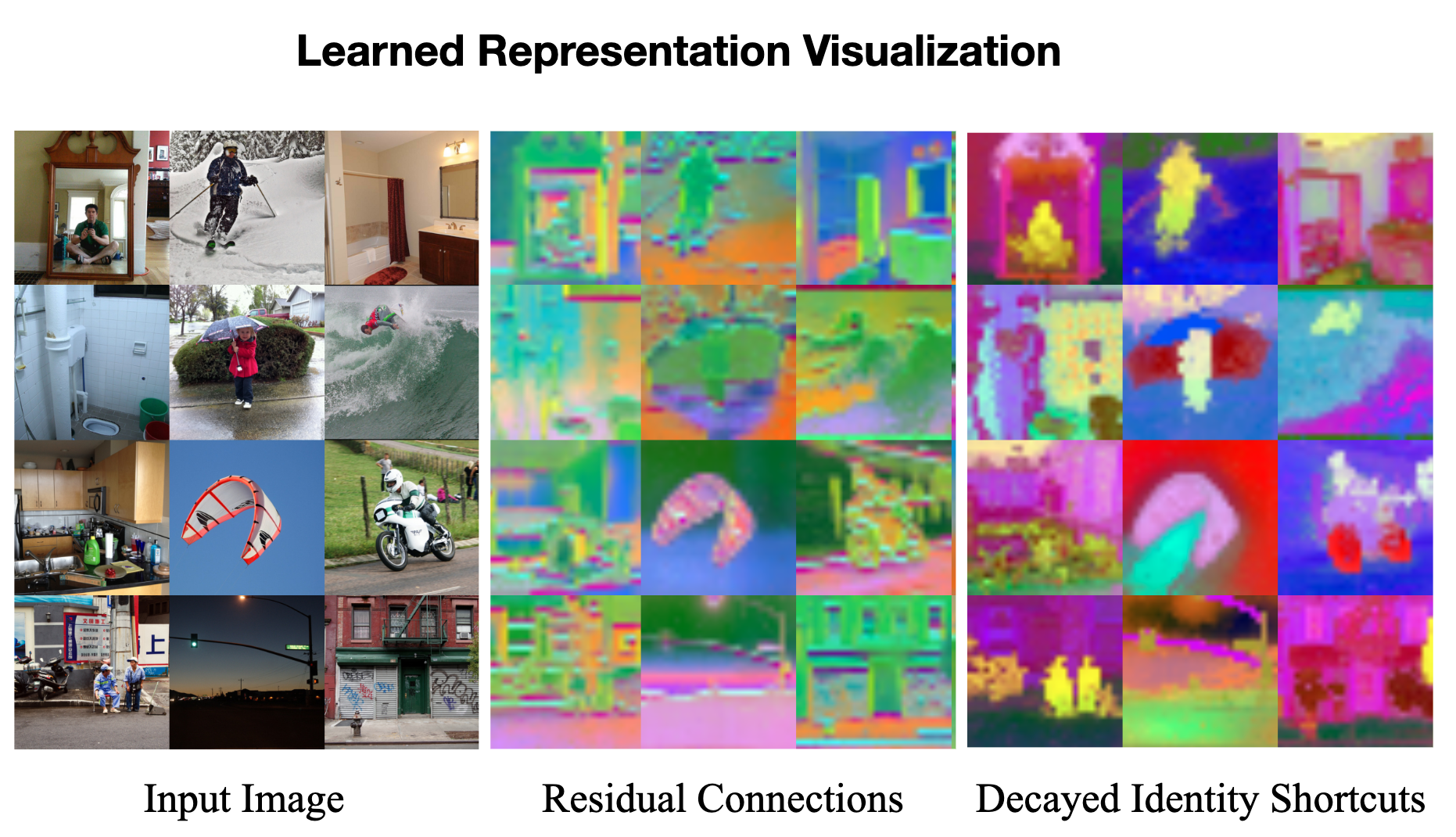

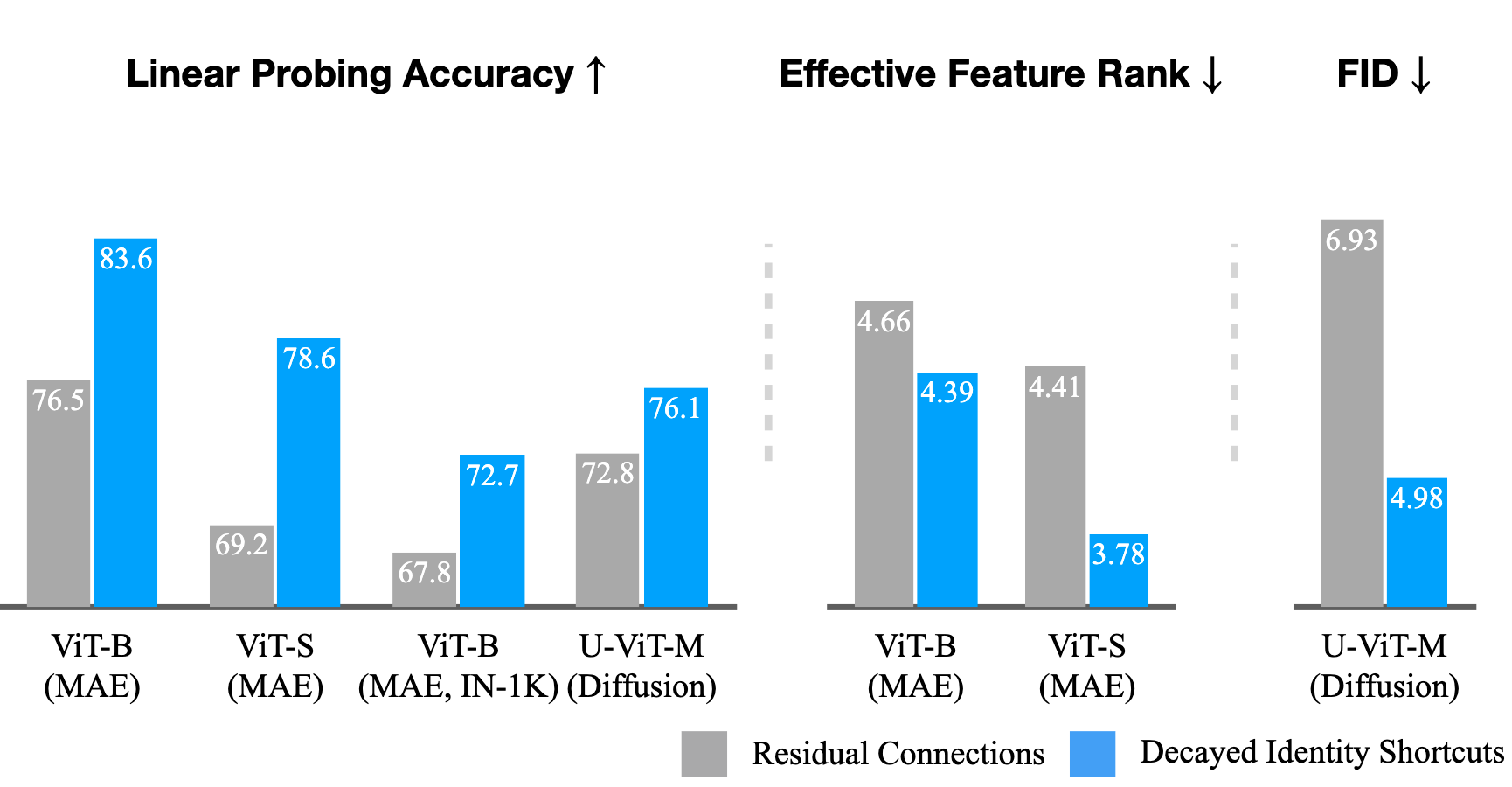

Xiao Zhang*, Ruoxi Jiang*, Will Gao, Rebecca Willett, Michael Maire. CVPR 2026. We introduce a novel neural network parameterization that induces a low-rank simplicity bias and enhances semantic feature learning in generative representation learning frameworks. By incorporating a weighting factor that reduces the strength of identity shortcuts in residual networks, our modification increases MAE linear-probing accuracy on ImageNet from 67.8% to 72.7%, while also improving the generation quality of diffusion models.


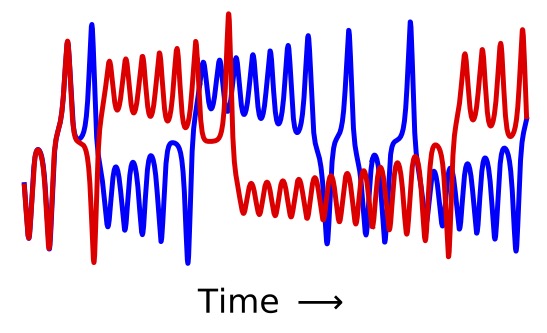

Ruoxi Jiang*, Peter Y. Lu*, Elena Orlova, Rebecca Willett. NeurIPS 2023 (poster). We introduce two novel approaches for matching the long-term statistics of chaotic dynamical systems from noisy observations: an optimal transport (OT) based method that uses prior knowledge of the physical properties, and a contrastive learning (CL) based method for when such prior knowledge is unavailable.


Elena Orlova, Aleksei Ustimenko, Ruoxi Jiang, Peter Y. Lu, Rebecca Willett. ICML 2024. We develop a novel deep-learning-based approach to solving the time-dependent Schrödinger equation, inspired by stochastic mechanics and diffusion models.


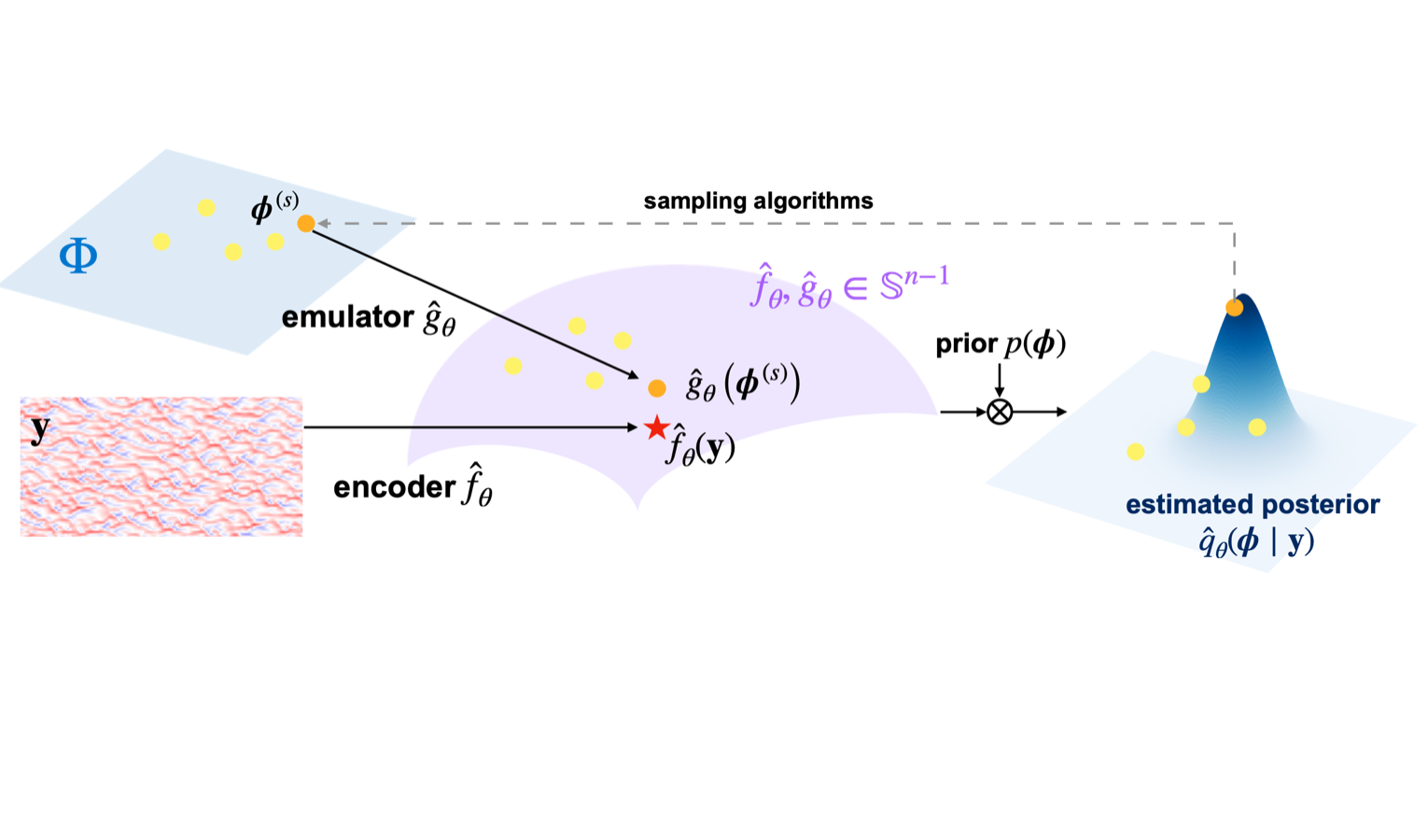

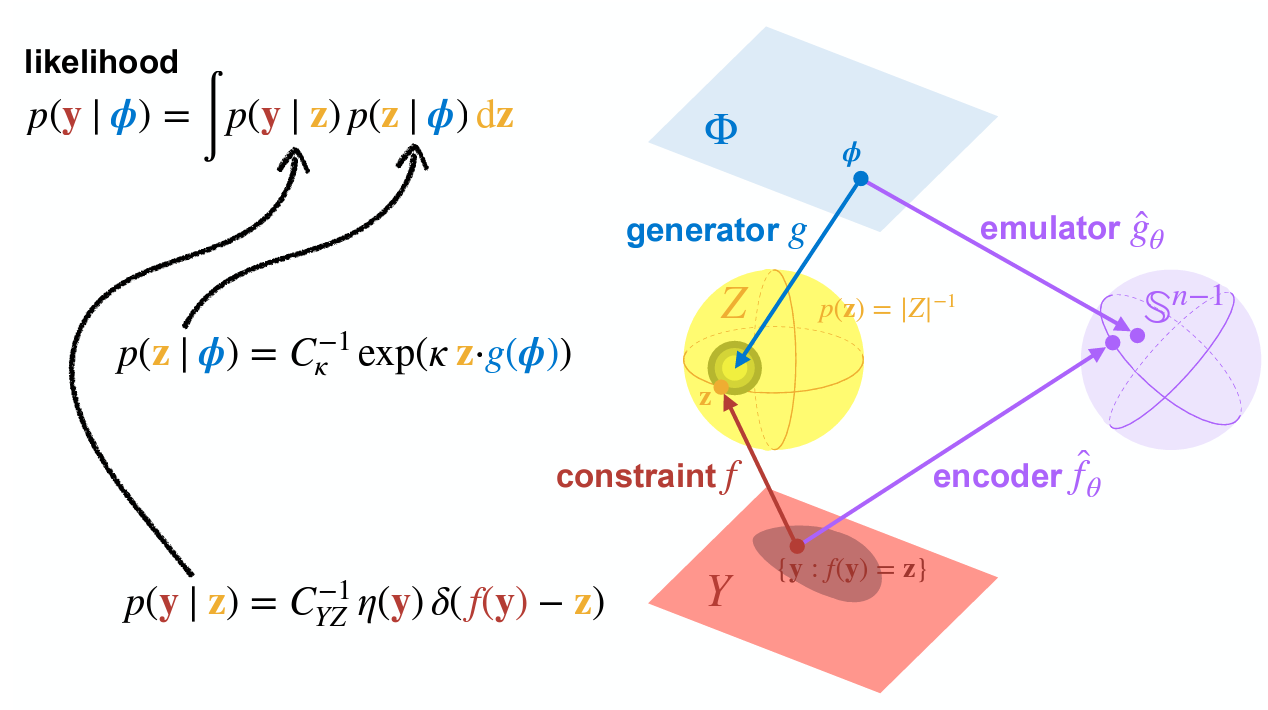

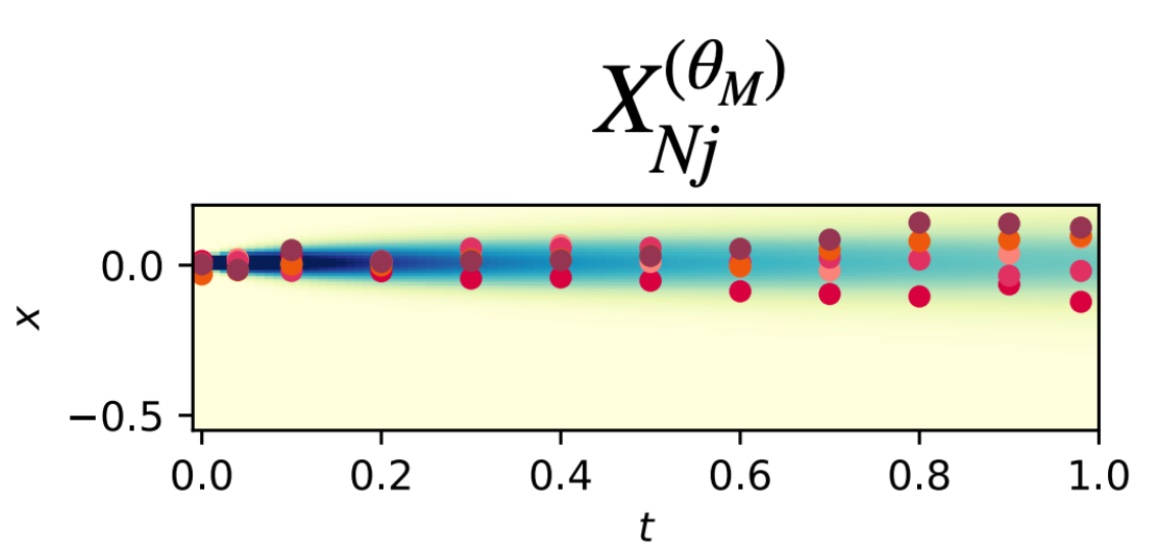

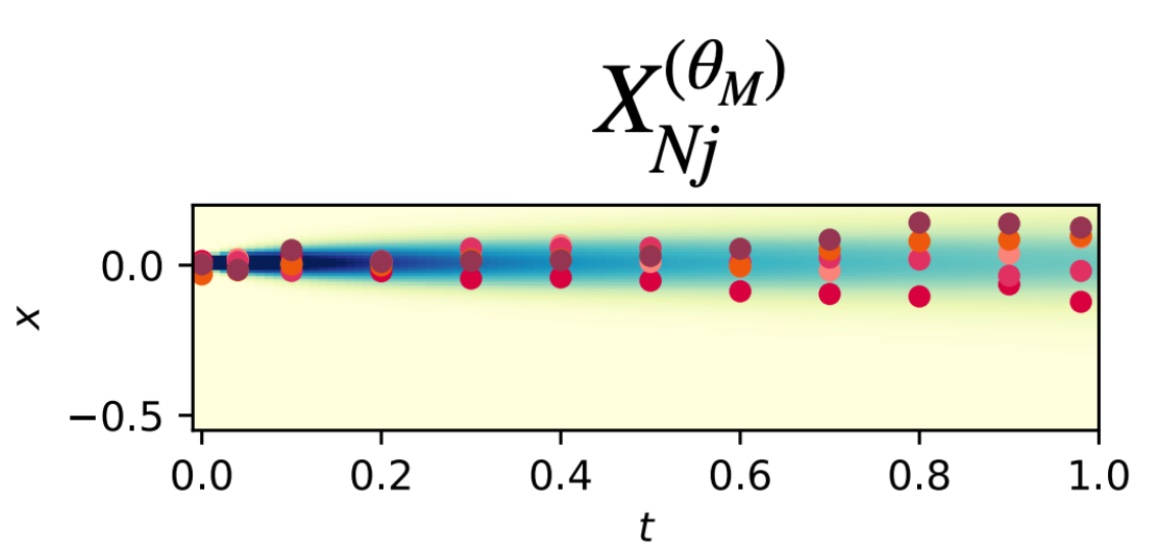

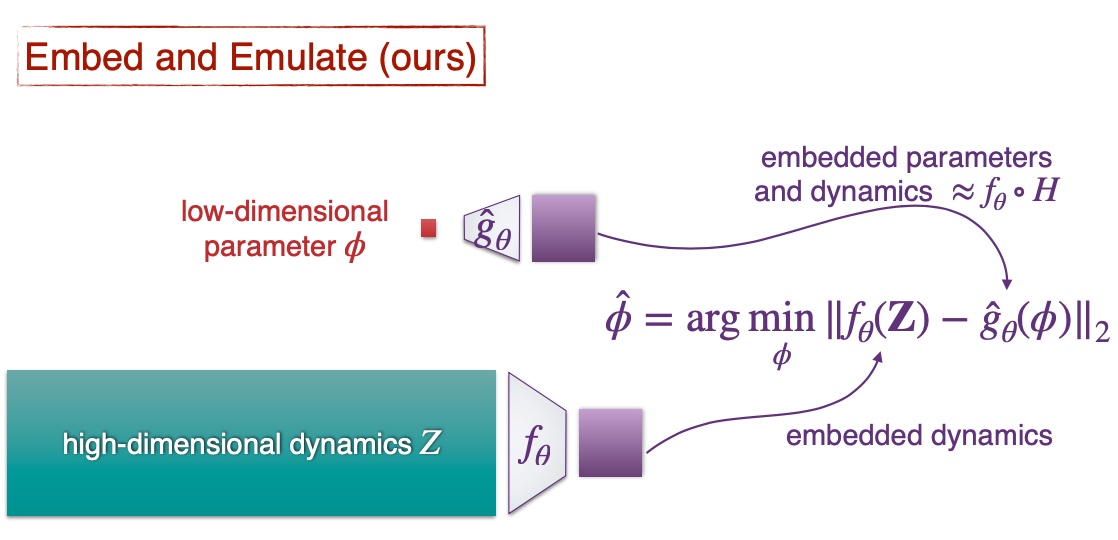

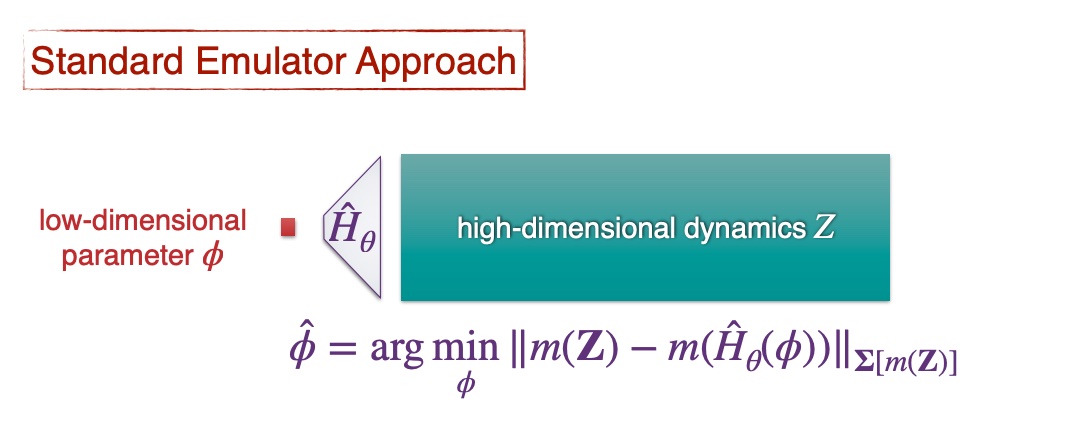

Ruoxi Jiang, Rebecca Willett. NeurIPS 2022 (poster, arXiv). A novel contrastive framework for learning feature embeddings of observed dynamics jointly with an emulator that can replace high-cost simulators for parameter estimation.


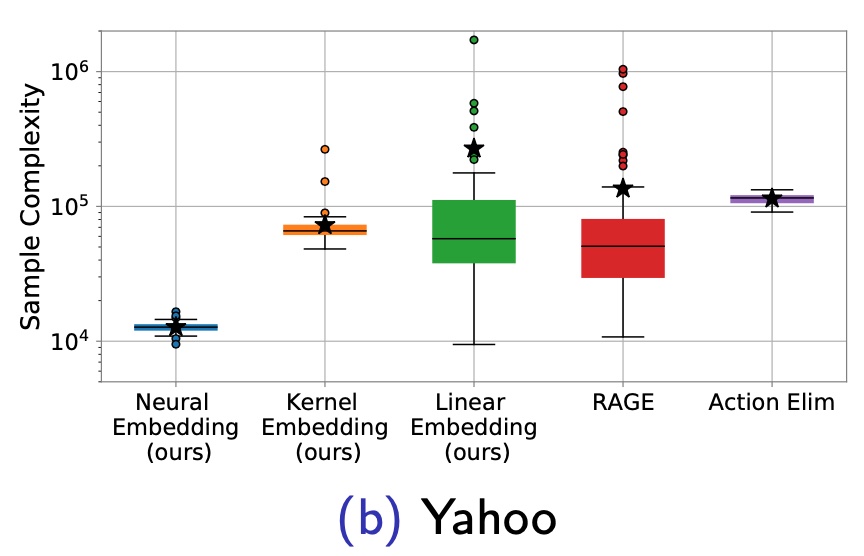

Yinglun Zhu*, Dongruo Zhou*, Ruoxi Jiang*, Quanquan Gu, Rebecca Willett, Robert Nowak. NeurIPS 2021 (slides, arXiv). To overcome the curse of dimensionality, we propose adaptively embedding the feature representation of each arm into a lower-dimensional space while carefully handling the induced model misspecification.


Source code from Dr. Jon Barron.